AI Assisted Form and Table Extraction - ArticulateML

Square 9 offers a number of traditional OCR options, but also has options that leverage tooling in the areas of AI and ML. While more modern extraction tooling can be very good at decreasing setup time, it’s not always a complete solution. Customers may need to blend modern and traditional approaches to form a complete, all encompassing data capture platform.

Square 9’s most recent offering in the AI extraction space, Form and Table Extraction (ArticulateML), involves AI assisted extraction models that are largely application/document/form independent. ArticulateML works off of two core constructs: forms and tables.

ArticulateML differs from other AI driven extraction offerings from Square 9 like TransformAI. Most notably, it is not document specific and can operate on any document type. However, like TransformAI, successful extraction outcomes do have rules. For TAI, those rules revolve around document characteristics that are common among Invoices and Receipts. For ArticulateML, the rules revolve around data points being grouped into either key / value pairs and Tables.

Key / Value Pairs

Keys and their associated values are a core construct of extraction with ArticulateML. For the more technical audience, Key / Value pairs are a common type of data structure used in programing and scripts. In the context of a document however, Key / Value pairs can take on new meaning.

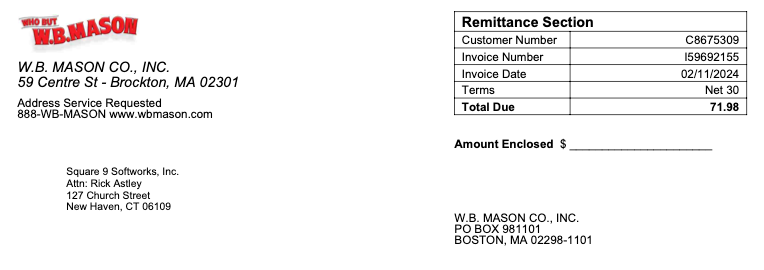

Consider the remittance section of a W.B. Mason invoice:

In a traditional extraction model, users are generally less concerned about keys and focus exclusively on values. It would be very simple to create an OCR template that extracted values for Customer Number, Invoice Number, Invoice Date, and Total Due. As your capture needs expand however, this model becomes fragile. Variances in scan resolution might impact positioning, and most certainly, similar documents produced by other vendors will introduce differences in layout. Square 9’s GlobalCapture offers a number of tools to help with such discrepancies in a more traditional manner, whether it be through Marker Zones, pattern matching, etc. ArticulateML takes a different approach.

Rather than using structured or semi-structured templates, ArticulateML leverages the power of AI to make assumptions about the text on a page. Rather than requiring a user to identify via a template that “C8675309” is the customer number, ArticulateML makes the assumptions automatically on behalf of the user. So in the AI assisted world, the OCR result wouldn’t be an arbitrary value “C8675309” that a user has told us should be inferred as Customer Number. Instead, the OCR result would resemble “Key: Customer Number, Value: C8675309”. The same pattern would hold true for all Key / Value pairs identified on the document. So in this case, you would expect to see results like:

"Key":Customer Number","Value":"C8675309"

"Key":"Invoice Number","Value":"I59692155"

"Key":"Invoice Date","Value":"02/11/2024"

"Key":"Terms","Value":"Net 30"

"Key":"Total Due","Value":"71.98"While the OCR results are extremely good, success does require adherence to a pattern of some kind. In the case of ArticulateML, each value is expected to have a descriptive key in its general vicinity. This does not mean keys and values need to be presented in a specific way visually, nor does it mean grid lines must be present in the document’s layout. It means that for each value one cares to extract, there must be a related key.

A case where this may present an issue is in the upper left corner of the W.B. Mason example above. In this case, both a phone number and a website address are present below the logo and address block.

In this example, there are two possible outcomes:

The phone number, the website address, or both simply don’t extract.

One or both values extract, but do so with a Key of “Address Service Requested”.

In either case, extracting one or both of these data points is likely better served with an alternate method. For example, a traditional zone extraction could be used to collect these data points. There are also other use case specific tools like Transform AI (which is tuned specifically for invoices and receipts) that looks specifically for data points that might match phone numbers and web addresses regardless of the presence of a Key.

Despite any limitations presented through the lack of a Key, ArticulateML offer’s a very powerful, very accurate approach to semi-structured and unstructured document extraction that fits well into a large set of extraction use cases.

Tables

In addition to Key / Value extraction, ArticulateML can be used to identify and extract tabular structured data from a document page. Because it is not bound to a document type, ArticulateML offers greater flexibility with tables and their associated values when compared to a feature like TAI.

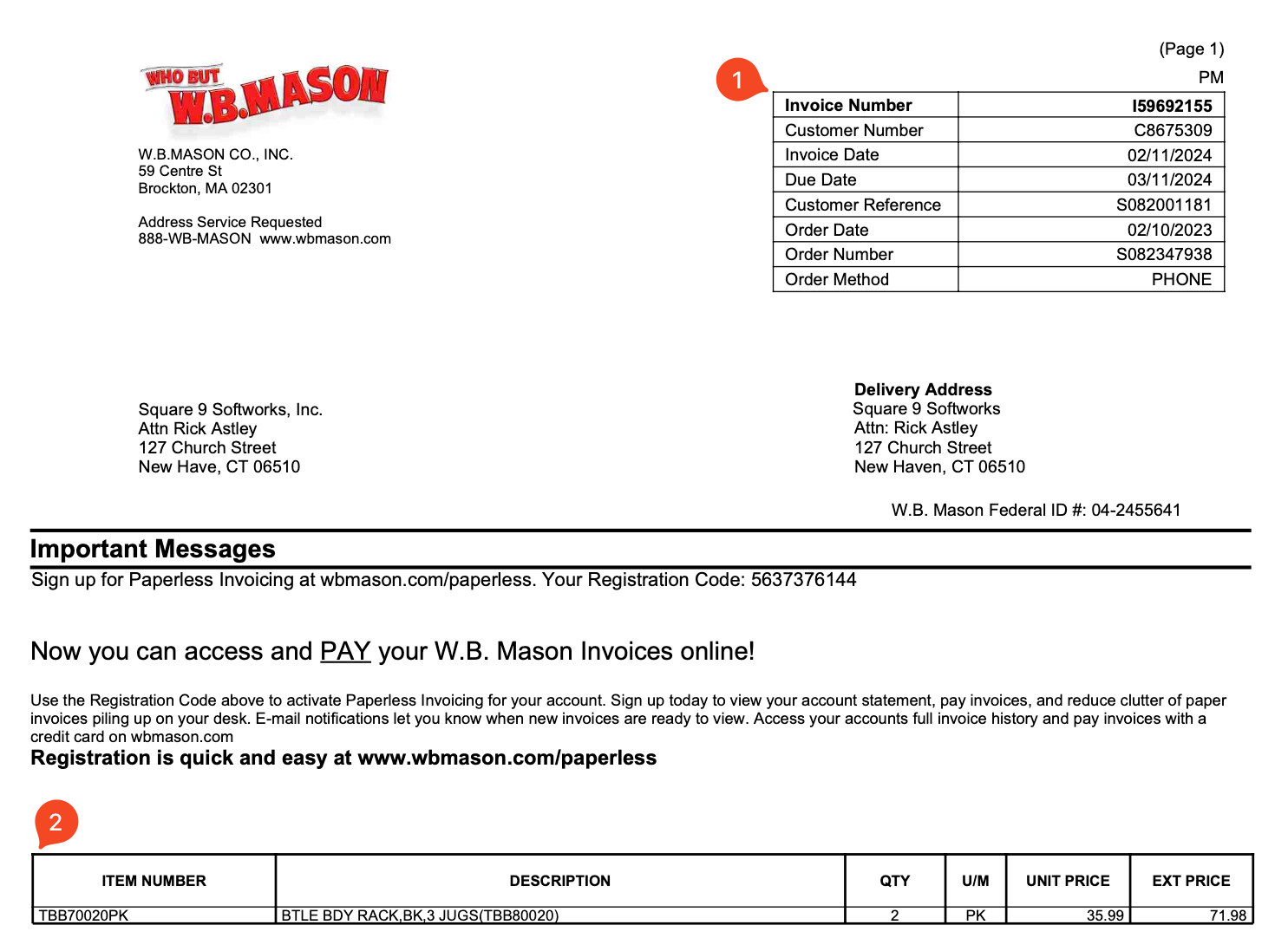

Continuing with the W.B. Mason example, tables are most commonly identified by rows and columns on a page:

While the human eye might be able to quickly determine that the table marked (2) is the table of interest for this specific document, the computer can not make such assumptions. In this image snippet, two discrete tables could be identified. Tables are most commonly and most successfully extracted when there are clearly defined rows and columns.

When ArticulateML executes on a document page, it will return the data organized by the table it was identified in. In the case above, we would expect two tables, one with 2 columns and 8 rows (table 1) and one with 2 rows and 6 columns (table 2).